Convolutional Neural Network

DAY 13

Introduction

- (Note) RNN skipped

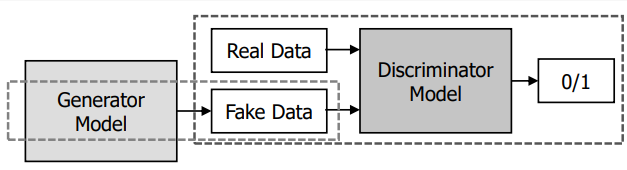

GAN: Generative Adversarial Network

- Trains both a generator and a discriminator

- -> The generator learns to produce samples similar to the original (real) samples

| generator | discriminator |
|---|---|
| making something from scratch (FAKE DATA) | determines whether such data is GOOD/BAD |

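- The performance of both the generator and the discriminator improves through competition

- e.g., just as counterfeiters' skill at producing fake banknotes and the police's ability to classify and verify them both improve day by day

- REAL DATA is required so that the discriminator can learn to classify fake data successfully

- The discriminator classifies each sample as 0 (fake) or 1 (real) => same as binary classification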
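Since the discriminator is described above as a binary classifier, its training signal can be sketched with an ordinary binary cross-entropy loss. This is a minimal illustration, not the notes' own implementation: the function names and the score values are made up, and the discriminator is assumed to output a probability in (0, 1).

```python
import math

def binary_cross_entropy(prediction, label, eps=1e-12):
    """Loss for one sample; prediction is D's output in (0, 1), label is 1 (real) or 0 (fake)."""
    p = min(max(prediction, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))

def discriminator_loss(real_scores, fake_scores):
    """Average BCE over real samples (label 1) and generated samples (label 0)."""
    losses = [binary_cross_entropy(s, 1) for s in real_scores]
    losses += [binary_cross_entropy(s, 0) for s in fake_scores]
    return sum(losses) / len(losses)

# Hypothetical discriminator outputs, made up for illustration only.
good_d = discriminator_loss(real_scores=[0.9, 0.95], fake_scores=[0.1, 0.05])
poor_d = discriminator_loss(real_scores=[0.5, 0.4], fake_scores=[0.6, 0.5])

# A discriminator that separates real from fake gets the lower loss.
print(good_d < poor_d)
```

Minimizing this loss is the same as maximizing the discriminator's share of the GAN objective, which is why the notes can treat the discriminator as a plain 0/1 classifier.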

DIFFERENCE FROM OTHER AI MODELS:

NO INPUT DATA: the generator is not given a real sample to transform. Still, the implementation DOES require some form of input.

- Random noise (\(z\)) is used as that input
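The role of noise as the generator's only input can be sketched as follows. Everything here is an assumption for illustration: `toy_generator` is a hypothetical single linear layer standing in for a real network, the dimensions are arbitrary, and the noise is taken to be standard normal.

```python
import random

def sample_noise(dim, rng):
    """Draw a latent vector z with independent standard-normal entries."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def toy_generator(z, weights, bias):
    """Hypothetical generator: one linear layer mapping z to a 'sample' vector."""
    return [sum(w * zi for w, zi in zip(row, z)) + b for row, b in zip(weights, bias)]

rng = random.Random(0)        # fixed seed so the sketch is reproducible
latent_dim, data_dim = 4, 2   # assumed sizes, chosen only for illustration
weights = [[rng.uniform(-1, 1) for _ in range(latent_dim)] for _ in range(data_dim)]
bias = [0.0] * data_dim

z1 = sample_noise(latent_dim, rng)
z2 = sample_noise(latent_dim, rng)
fake1 = toy_generator(z1, weights, bias)
fake2 = toy_generator(z2, weights, bias)

# Different noise vectors lead to different generated samples.
print(len(fake1), fake1 != fake2)
```

The noise is what lets a fixed generator produce many different outputs: each new \(z\) maps to a new fake sample.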

Goal of Generative Model

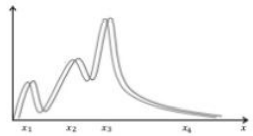

- Find a \(p_{model}(x)\) that approximates \(p_{data}(x)\) well

- \(p_{data}(x)\) : represents the distribution of actual images

- \(p_{model}(x)\) : represents the distribution of generated (fake) data; it needs to be as close to \(p_{data}(x)\) as possible

- \(D(x)\) = 1 (or close to it): the discriminator's output on real data \(x\) should be high

- \(D(G(z))\) = 0 (or close to it): the discriminator's output on data generated (\(G(\cdot)\)) from noise (\(z\)) should be low
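The notes refer to \(V(D,G)\) and its "first part" and "second part" without writing the function out in the text. Assuming they follow the standard GAN formulation, the value function is:

\[\min_{G} \max_{D} V(D,G) = E_{x \sim p_{data}(x)}[\log D(x)] + E_{z \sim p_{z}(z)}[\log(1 - D(G(z)))]\]

Here the first expectation is the "first part" (real data) and the second expectation is the "second part" (generated data); \(p_{z}(z)\) denotes the noise distribution.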

Objective of D :

- \(D\) should maximize \(V(D,G)\)

- First part (\(E_{x \sim p_{data}(x)}[\log D(x)]\)) : maximized when \(D(x) = 1\)

- Second part (\(E_{z \sim p_{z}(z)}[\log(1-D(G(z)))]\)) : maximized when \(D(G(z))=0\)
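The discriminator's objective can be checked numerically with a small sketch. It assumes \(V(D,G)\) is the sum of the two expectations named in these notes, estimated by averaging over samples; the function name and the discriminator outputs are invented for illustration.

```python
import math

def value_function(d_real, d_fake):
    """Sample estimate of V(D, G) from discriminator outputs.

    d_real: values of D(x) on real samples.
    d_fake: values of D(G(z)) on generated samples.
    """
    first = sum(math.log(p) for p in d_real) / len(d_real)           # E[log D(x)]
    second = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)    # E[log(1 - D(G(z)))]
    return first + second

# Hypothetical outputs: a confident discriminator versus one that always says 0.5.
confident = value_function(d_real=[0.99, 0.98], d_fake=[0.01, 0.02])
guessing = value_function(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])

# V is larger (closer to its maximum of 0) for the confident discriminator.
print(confident > guessing)
```

Both terms are logarithms of probabilities, so \(V\) is never positive; it approaches 0 as \(D(x) \to 1\) and \(D(G(z)) \to 0\), which matches the two conditions listed above.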

Objective of G :

- \(G\) should minimize \(V(D,G)\)

- => Tries to make fake data PASS the discriminator (\(D\))

- First part : not relevant to \(G\), since it does not depend on \(G\) (the generator has no access to REAL DATA)

- Second part (\(E_{z \sim p_{z}(z)}[\log(1-D(G(z)))]\)) : minimized when \(D(G(z))=1\)

- \[\min_{G} E_{z \sim p_z(z)}[\log(1 - D(G(z)))]\]

- In practice this is replaced by \[\max_{G} E_{z \sim p_z(z)}[\log D(G(z))]\]

- The two objectives are not equal in value, but both push \(D(G(z))\) toward 1; the latter is used because it gives stronger gradients early in training, when \(D\) easily rejects generated samples, and so tends to converge more quickly
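Why the \(\log D(G(z))\) form tends to train faster can be illustrated by comparing gradients. The sketch assumes the discriminator ends in a sigmoid, so \(D(G(z)) = \sigma(a)\) for some logit \(a\); the function names are illustrative only. The derivatives used are \(\frac{d}{da}\log(1-\sigma(a)) = -\sigma(a)\) and \(\frac{d}{da}\log\sigma(a) = 1-\sigma(a)\).

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def grad_saturating(a):
    """d/da of log(1 - sigmoid(a)): the term G minimizes in the original objective."""
    return -sigmoid(a)

def grad_non_saturating(a):
    """d/da of log(sigmoid(a)): the term G maximizes in the alternative objective."""
    return 1.0 - sigmoid(a)

# a = -6: D confidently rejects the fake; a = 0: D is unsure; a = 6: D is fooled.
for a in (-6.0, 0.0, 6.0):
    print(
        f"D(G(z))={sigmoid(a):.4f}  "
        f"saturating={grad_saturating(a):+.4f}  "
        f"non-saturating={grad_non_saturating(a):+.4f}"
    )
```

When \(D\) confidently rejects a generated sample (very negative logit), the gradient of the original term is close to zero, so \(G\) learns slowly exactly when it most needs to improve, while the alternative term still has a gradient close to 1.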